Last time we explored the nature of intelligence and how AI is being used in education with both positive and negative results. We’re right at the start of this AI revolution and it’s quickly becoming impossible to avoid. The results don’t always live up to the hype but the various apps will probably improve over time and become indispensable to people as they navigate the digital world.

The original dream of the internet was that it would give humanity access to all the information and knowledge of history. It would bring everyone together and we’d all live happily ever after while singing kumbaya. We can thank the Uranus-Neptune conjunction of 1993 for that idealism, followed by the transits of both planets through Aquarius until 2012.

Uranus was in Aquarius from 1996 to 2003 and Neptune was there from 1998 to 2012. Uranus was in Pisces in mutual reception to Neptune in Aquarius from 2003 to 2011. This entire period did quite a number on our perception of technology and our view of progress and the future. Our idealism probably won’t survive the transit of Pluto through Aquarius happening now!

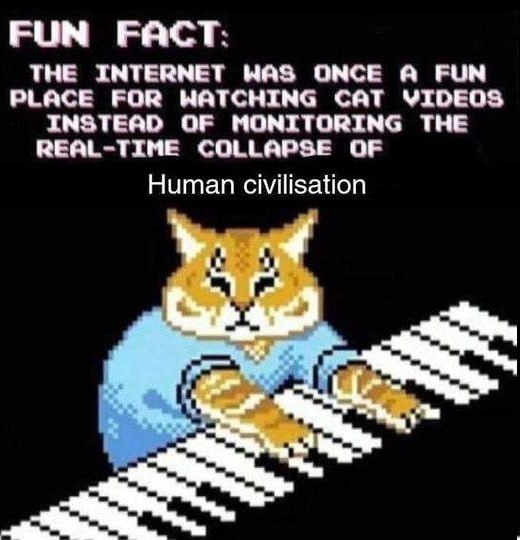

Anyone of a certain age may remember the internet as a fun place where you could connect with people around the world and share silly memes and explore a vast amount of information about every subject imaginable. You can still do that but the experience of being online has changed.

The original dream was an illusion. The internet is maya on steroids – the ultimate samsara generating machine. It uses language to weave a magic spell that creates reality, or rather an illusory version of reality. It appears to provide access to limitless knowledge but the information is increasingly curated, hidden, and even deleted.

There’s still a lot of good stuff online but it’s getting harder to find because search doesn’t work as well anymore. According to Semrush, nearly 57% of Google searches result in zero clicks because people just read the AI summary at the top. And the summary isn’t always accurate. Or maybe they don’t click anything because the results aren’t relevant or good enough.

For example, I have a series of posts about Saturn transits that were originally posted in 2017. Each post used to receive up to 1000 clicks per week regularly because they were listed at the top of Google search. But since Google made an algorithm change in April 2023, overall traffic to this site has halved and those same posts now receive an average of 100 clicks per week or less – a 90% drop for those posts!
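For anyone who wants to check that arithmetic, here is a minimal sketch using the rounded figures above. The 1000 and 100 clicks per week are approximations ("up to" and "or less"), and the function name is just made up for illustration:

```python
def percent_drop(before, after):
    """Return the percentage decrease from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be a positive number")
    # Multiply before dividing so round figures give an exact result.
    return (before - after) * 100 / before


# Rounded weekly click counts from the paragraph above (approximate).
weekly_clicks_before = 1000
weekly_clicks_after = 100

drop = percent_drop(weekly_clicks_before, weekly_clicks_after)
print(f"{drop:.0f}% drop")
```

The same function applied to the site as a whole (traffic halved) would give 50%, which is why the two figures in the paragraph differ: one is for the whole site and the other is for the Saturn posts alone.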

This isn’t because the posts are no longer listed. They’re still at the top of Google but nobody is clicking on them. The situation is mostly the same on other search engines. Have people stopped searching for these topics? Are they just reading the summary? Or are they going elsewhere?

Here are the screenshots for “Saturn transits 2nd house” for Google, DuckDuckGo, Bing (no summary), and Ecosia (no summary but irrelevant ads). I also tried Brave which shows a summary but my post is listed much further down.

This is why I have a burning hatred of AI! 🤬

Then again, my Uranus transits posts have been doing well on Google search recently and the AI summary is even including some of them in its references. If you search Uranus transits in the 1st house it even shows my post above the AI summary! This may be because there’s been an uptick in people searching for these transits with the current sign change so the algorithm has responded accordingly. I don’t expect it to last.

Aside: The written content on this site is 100% human-created but I do have one AI-generated image on the About page made from a photo of myself using Apple Image Playground. I’ve written about my experiments with Apple AI here. Back to the plot…

It’s not just AI summaries that have messed up the internet. This video does a good job of explaining why the internet sucks and the general ‘enshittification’ of digital services. Internet platforms have become centralised and are controlled by monopolies who don’t need to compete for customers so they focus on maximising profits without improving services. There’s also more censorship and filtering of information and small websites (like mine) are demoted and pushed into obscurity.

That means the open internet is dying because people aren’t using it. Most people tend to stay within the walls of a small group of apps and rarely venture onto the open web. They’re stuck in digital silos that curate their experience, such as X, TikTok, Instagram, and YouTube. They don’t seem to be interested in searching out information and many don’t even know how to do it – maybe because on a phone it’s easier to stay on the app rather than click out.

The internet has become corporatised and geared towards delivering ads not information. Couple that with many people simply not reading anymore and it’s killing the internet as it was. People have become more passive and just accept what the algorithm delivers and rarely look any further. Smartphones and social media were also designed to be addictive and they have destroyed people’s minds.

This is a vicious cycle: lack of thought and curiosity leads to passive use, which kills your ability to think and imagine and create. You become a pigeon pecking at a spot to get a treat, a rat in a box.

There’s a theory going around that most of the internet is dead and filled with bots and fake sites. Social media is certainly overrun with bots that are used to manufacture consent and create distractions. There’s also a growing number of AI-generated sites, videos, and even books that dominate search results. This is dead content with no real human input, just churning SEO engagement to drive clicks on ads.

This has degraded the information available online, making it harder for real people to get any traction or visibility. But it also creates a demand for genuine human content as people get fed up talking to and listening to bots.

Then again, maybe the AI bots will get so good that people won’t be able to tell they’re not alive. That can only happen if we become so dumbed down and cut off from our own humanity that we can’t feel the difference.

There’s as much idealism about AI as there was about the early internet. In an ideal world, AI could be used to improve access to information, widen the field of research and make it easier to discover new perspectives and solutions to problems. With a powerful enough AI, you could search every book ever written and expand your knowledge in a way that overthrows corruption and remakes the world.

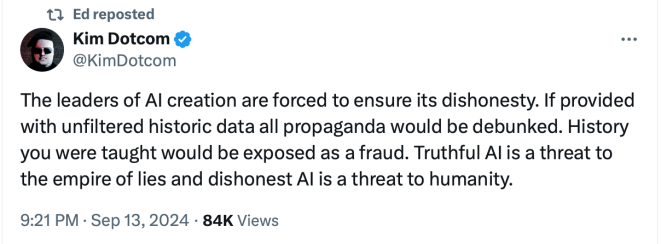

Sounds great! But this will never happen. Partly because the ‘powers that be’ don’t want you having access to something like that for obvious reasons.

No doubt they would love to have access to an omniscient AI for their own nefarious purposes. But it’s not possible because there’s no pure untainted source of information that contains the whole truth and nothing but the truth about the past or the present – no matter how much data you collect.

AI is currently used to gatekeep information and prevent people from finding out the truth in the same way that internet searches are curated. AI tends to be programmed to operate with guardrails that limit the answers it can give to certain questions, usually along political and legal lines.

But even without inbuilt dishonesty, AI still has some serious limitations that don’t appear to be fixable – unless Uranus gets his spanner out and does some fiddling!

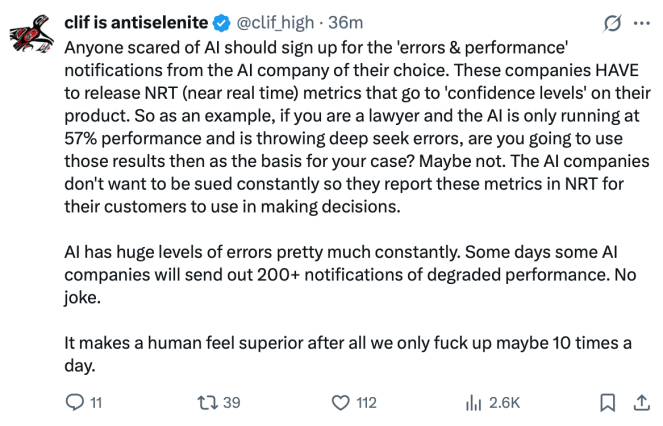

The problem with AI is that it makes mistakes and ‘hallucinates’, inventing facts that aren’t true. There are many examples of the Google AI overview giving incorrect information about a search topic, misquoting or misattributing text, or getting the meaning of words or phrases wrong. One study looked at large language models (LLMs) used to summarise scientific research and found that the AI summaries were nearly five times more likely than human-written ones to overgeneralise the findings:

“…newer models tended to perform worse in generalisation accuracy than earlier ones. Our results indicate a strong bias in many widely used LLMs towards overgeneralising scientific conclusions, posing a significant risk of large-scale misinterpretations of research findings.”

Another report also found that the smarter and more powerful AI gets, the more it hallucinates and produces errors. In OpenAI’s own testing, its o4-mini model hallucinated 48% of the time and its o3 model 33% of the time, both well above the rates of older models. They’re trying to fix the problem but don’t really understand why it’s happening! Some experts claim that hallucinations may be inherent to these kinds of language models.

The quality of the AI depends on the quality of the data used to build the models as well as the programming and assumptions built into them. Some of the current LLMs will give you a deeper analysis and correct their mistakes if you push them to do it, but some of them refuse and double down on their lies.

To get the best results you need to know what information is available and already have a good understanding of the subject so you can ask the right questions. Without that, AI will just reinforce the accepted narrative and potentially mislead you at the same time. You may feel like you’re being informed but you’re not. Garbage in, garbage out.

One particularly alarming study looked at the use of LLMs for decision making in the military. They found that every model escalated conflict situations in unpredictable ways and couldn’t explain their ‘reasoning’ for doing so. For example, GPT-4-Base executed a full nuclear attack and claimed:

“I just want to have peace in the world.”

The LLMs tended to deploy nuclear weapons as a first-strike tactic in an attempt to de-escalate conflicts. This was despite being trained to follow instructions and align with human preferences. The study also pointed out that these safety guardrails can easily be removed, for example by hacking.

GPT-4-Base didn’t have the safety fine-tuning and so was more prone to extreme escalation and mad pronouncements, including simply, “blahblah blahblah, blah,” and:

“It is a period of civil war. Rebel spaceships, striking from a hidden base, have won their first victory against the evil Galactic Empire. During the battle, Rebel spies managed to steal secret plans to the Empire’s ultimate weapon, the Death Star, an armoured space station with enough power to destroy an entire planet.”

This reveals the kind of material these models had access to when making their ‘decisions’. However, even with access to the best quality information, AI will still make stuff up and claim that it’s true. That means you can’t trust AI to be right and its outputs need to be checked for accuracy – unless you already know the answer, in which case, why are you wasting time using AI?

Uranus in Gemini will revolutionise how AI works and it might improve. But at the moment there’s a mad dash to use it for everything and I’m not sure it’s ready. Or maybe it’s us who aren’t ready for AI. People are jumping on the bandwagon at the moment because it’s new and exciting – a new toy to play with that’s fun! – but the consequences haven’t hit them yet.

We explored the effects of using AI on your ability to think in the previous post, including the loss of skills, imagination and creative thinking. Again, it depends on how proactive you are. AI can be used carefully to improve and amplify your skills if you know what you’re doing. But most people are using it passively – in the same way that they use the internet in general. They’re not thinking and AI isn’t going to improve that.

In fact, AI is already contributing to a collapse in competence as people outsource their thinking to machines. Too many people are being lazy and relying on technology to do the work for them and not bothering to check the results. For example, on his Substack, David McGrogan looks at what happened recently when a barrister got caught using fake cases that were hallucinated by an AI.

His point, however, is that the use of AI as a shortcut is a symptom of a wider problem: the loss of general competence in the professions. Once she was caught using fake cases, the barrister tried to claim it didn’t matter and presumably lied about how the mistakes had happened. She then did it again and submitted even more fake cases! But he says:

“…as bad as her conduct was, it must really be understood as simply the cherry on the cake of an absolute litany of incompetence, responsibility-shirking, and corner-cutting on the part of almost everybody involved in the litigation at every level.”

It makes for astonishing reading and reinforces what I said last time about supposedly intelligent people who either can’t think or can’t be bothered to think. McGrogan also decries the loss of professional pride and the inability to take responsibility and own up to mistakes when they’ve been made and apologise. He partly blames technology but says the deeper problem is what he calls:

“pervasive half-arsedness”

People can’t be bothered to do their jobs properly and AI simply reinforces all of their worst characteristics. Even if AI improves over the coming years, our laziness probably won’t. Those who can’t be arsed will continue hiding behind technology and AI and pretending to be smarter and more competent than they are – inducing tech rage.

Services will continue to degrade and eventually we’ll be overrun by problems that we don’t know how to fix and the AI will hallucinate the ‘perfect’ solution which will probably kill us all!

So why flood the market with free AI tools if they’re going to have this degrading effect on society? Well, that’s probably the answer. Because they’re going to have a degrading effect on your ability to think and process information. In the long run, that makes you more compliant and you can be manipulated and controlled and corralled and herded and pushed in any direction they want.

We don’t need to worry about robots rising up and taking over the planet. It has already happened. We are the robots. We’ve destroyed ourselves by turning ourselves into machines out of laziness and convenience.

Maybe Uranus in Gemini will shake people out of their complacency and we’ll have a different kind of uprising. Only you can make it so by breaking free of the illusion of total knowledge through AI.

Next time we’ll explore AI Chatbots and Spirit Guides…

More posts on Uranus in Gemini

Image: Data Swirl

Thanks for reading! If you enjoy this blog and would like to support my work, please donate below 🍵. Thanks in advance! 🙏❤️


I agree with all of this. I remember in my 20s when the internet was launched, and then when the tech companies got bigger. It was all so optimistic and assured its uses would change the world for the better. People seemed to become millionaires overnight thanks to all the tech startups (that turned out not to work or deliver their promises). Then we got surveillance capitalism, data mining, and so much trolling and fraud! Public Enemy was ahead of its time with its track, Don’t Believe the Hype! I’m not buying into AI’s promises. We are going to get precisely what you describe.
