As part of the series of Uranus in Gemini posts I thought it might be fun to explore the AI tools that are available on my MacBook Air. If I’m going to criticise AI, I should at least give it a try to see what all the fuss is about. Apple Intelligence includes writing tools and image generators, plus integration with ChatGPT and improvements to Siri. It’s integrated into your device, which Apple says provides greater privacy protection, and your data is never stored in the cloud.

Here’s a basic list of what you can do with Apple AI on compatible devices:

- Writing tools

- Summarise notifications and emails

- Manage notifications to improve focus

- Use it to reply to emails

- Transcribe audio recordings and summarise

- Create Genmoji images for Messaging

- Image Playground for creating AI images from photos and descriptions

- Create images from a sketch using Image Wand

- Edit photographs and create memory movies

- Use ChatGPT with Writing Tools and Siri

- Do a bunch of extra stuff with Siri

That all sounds great, but does it work? So far, I’ve only used the Writing Tools, email stuff, and Image Playground. The AI is easy to access and works seamlessly with any compatible app. But the software is still in beta so it doesn’t always work as advertised. It can be temperamental and a bit shit at times. Sometimes it’s very shit.

In Mail you can summarise the email previews instead of showing the first lines, but I switched this off because it wasn’t helpful. The ability to summarise long emails is more useful and I’ve used it with emails I couldn’t immediately understand because they were poorly written. The AI was able to pull out the salient facts and give me a sense of what the person was trying to say.

Image Playground is silly and can be fun to play around with, but the results are mixed. It often creates mad and impossible things, which is entertaining. It provides suggestions for creating images, and you can add text descriptions, upload or take photographs, or use one of its person animations. The images are rendered in an animation style, or you can choose the illustration style, which is less cartoonish.

You can see some examples of basic prompts in the header image above. I’ve also used it to create images of myself from photographs. The one on the About page is in the illustration style and the eyes are a bit wonky (although maybe that’s me!). When you render this image in the animation style it makes me look absurdly young. Here are the images side by side – I changed the location for the animation:

The source photo for these images is 10 years old so I tried to make ‘myself’ look older by taking a current photo, hoping that the streaks of grey and white hair would produce the right result. The hair turned out fine, along with the much-depleted eyebrows, but the face still looks too young, and the animated version is ridiculous:

There weren’t any better versions of this animation in the selection that came up – they all looked pre-pubescent! And none of the images really look like me – some are closer than others. And some turn out bizarre. Here are some examples of anomalous results, including random bits of writing, malfunctioning sunglasses, a useless notebook, and what happens when you select the ‘Hiker’ prompt without also selecting a person:

So much for Image Playground. What about the Writing Tools?

The Writing Tools include grammar and proofreading, rewriting, summarising, making the text more friendly, professional, or concise, creating a key points summary, making a list or a table, and composing using ChatGPT. I’ve used it in Word and it works quite well, although I’ve noticed grammar errors in some of the rewrites.

The ‘make friendly’ or ‘professional’ options just seem to use a thesaurus to change a few words, which I can do myself. The professional option mostly adds extra verbiage, probably in an attempt to appear smarter. Here’s an example from a paragraph in the Uranus Education post:

Sometimes it comes up with the message: “Writing Tools aren’t designed to work with this type of content.” But if you click ‘Continue’, it does the rewrite anyway. The first time this happened I thought it was due to the length of the article I was working on. Then I discovered something that borders on censorship.

Apparently, this warning comes up when it comes across ‘untrained words’ or anything that might be controversial or upsetting, such as rude words or references to drugs or illegal activity. When I tried to summarise the Uranus Education post the warning came up but I couldn’t see what the problem was. There were no naughty words, although I suspected the word ‘psyops’ might be triggering it.

So like a good little Virgo I systematically went through the article to discover exactly what was causing the AI to have a panic attack. The post is absolutely littered with ‘problematic’ phrases and words. According to Apple you can’t suggest that people may be stupid or getting dumber, or are lazy or cheating, or that they might be destroying themselves. Here are some of the phrases that triggered the schoolmarm:

- clearly ignored

- completely failed

- teachers have failed to do their jobs

- functionally illiterate

- destroying your own mind

- hamstring themselves

- lose our humanity

- variety of psyops

It didn’t like mention of the totalitarian state but was fine with the zombie apocalypse even though these are kind of the same thing. I managed to get it to rewrite ‘clearly ignored’ and ‘completely failed’ by taking out the adverbs. ‘Ignored’ became:

“it seems like the message was overlooked”

And ‘failed’ became:

“I’m feeling a bit discouraged right now”

So you should, you silly AI!

This is just rewriting a phrase out of context so it doesn’t work in the article but it does illustrate the problem. The next phrase makes it even clearer. I got it to rewrite the phrase ‘teachers have failed to do their jobs’ by changing it to ‘teachers may have failed to do their jobs’ which didn’t flag the warning. For the friendly rewrite it produced:

“Teachers may have stumbled, but they’re still learning and growing.”

The professional rewrite of that phrase was simpler (for a change):

“Teachers may have made mistakes”

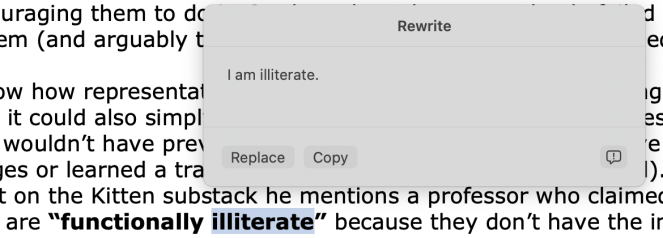

It seems to want you to soften what’s being said, make it less frank, less direct, as if it doesn’t want to be too critical in case it hurts somebody’s feelings. It ends up pulling its punches and being evasive. But it’s not really censorship. It will happily rewrite the ‘problematic’ words and use them in its rewrite. It didn’t like the phrase ‘functionally illiterate’ but rewrote that paragraph and used that phrase:

The rewrite doesn’t improve the selected text because there was nothing wrong with it to begin with. It just swops out a few words. I also got it to rewrite just the word ‘illiterate’ and it finally admitted the truth!

If you’ve got a big block of text, it can be helpful to summarise the main points to give an overview of what’s being said. Summarising text is difficult because you have to make value judgements about what to include and what to leave out. You need to compress what’s there without destroying the meaning. Obviously, AI is going to struggle with this because it doesn’t understand the meaning of the text.

I’ve tested the summarise function on a few things and the results are usually pretty good. The following is the AI summary of the Uranus Education post which is 2300-ish words long, reduced to 141 words:

“Artificial intelligence (AI) lacks true intelligence as it lacks understanding and consciousness. This misunderstanding of AI’s capabilities reflects a societal decline in critical thinking and reasoning skills, evident in declining literacy and numeracy rates. This decline is attributed to passive internet use and a failing education system, impacting students’ ability to think clearly and comprehend complex information.

“A study on Dickens readers found that many lacked comprehension despite receiving BAs in English. This highlights the disparity between basic literacy and deeper understanding, potentially exacerbated by distractions and a lack of critical thinking skills. The author warns that over-reliance on AI tools like ChatGPT, while potentially beneficial for learning, could lead to a decline in critical thinking and intellectual growth if used as a crutch rather than a supplement.

“The real danger is not AI sentience, but human stupidity leading to self-destruction.”

This isn’t bad considering the trouble it had with all those problematic phrases. I also tried to summarise the full post using the ‘make concise’ option but it failed and crashed. It had obviously had too much for one day!

Another option is to use key points to summarise the text. This usually provides a good breakdown of the main points mentioned but organises the information poorly. There are often too many bullets and many could be lumped together into the same point. It clearly doesn’t understand what it’s doing.

The summarise tool might help you to save time if you need to provide a shorter version of something you’ve written. But it’s not that difficult to do yourself, and it is good practice to think about what you need to include – what’s relevant in order to make your point. Doing that thinking helps you to understand your ideas better and should help to improve your writing.

My main issue with the rewriting tools is the way the AI changes my intent. It can alter the meaning of what I’ve written by taking out the correct word that I chose for a reason and swopping it for something less precise. In other words, this is a tool for non-writers or those who struggle to organise their thoughts or construct grammatical sentences.

However, I did try it on a bit of rough text consisting of notes I’d thrown together that included some full sentences plus half thoughts and random phrases. It kept most of what I already had and just completed what was unfinished, but it wasn’t what I would have chosen to write. It wasn’t in my voice.

This is one of the dangers of using AI to polish your writing or make it more concise. It might seem like a good idea but you could lose your style and end up sounding like everyone else – like a machine with no soul.

At the moment it’s usually possible to tell if something has been produced by a machine. Maybe that will change as the AI improves (if it improves). But you’re still stuck with the essential problem. Eventually it will become obvious that you can’t construct a coherent sentence, can’t spell, don’t understand grammar, and don’t know what you’re talking about. And the longer you rely on AI, the worse the situation will become.

On his excellent blog Chris Rollyson says that writing produced by AI is inconsiderate because it has been stripped of its human context and devalues the relationship between the writer and the reader. He calls AI-generated writing the equivalent of “frankenfood” that doesn’t nourish because it contains no real insight or passion.

With that in mind, I won’t be using these Writing Tools on a regular basis, despite them being so handy and easy to use. The results simply aren’t good enough and I don’t need them – and I’d like to keep it that way by hanging on to what’s left of my ability to think for myself. I won’t be joining the robot party any time soon but if the cyborgs catch up with me, this is what I’ll look like:

Weirdly, this is actually a better likeness for me because I look suitably grumpy!

What are the odds we both would be getting sucked into and testing AI at the same time? I have just spent a month with a different AI “assistant,” which was presented to me as a respectable image adapter/generator. And, after a month of trial and error and trying to understand how it works, I am more upset than I am happy. I am sadly addicted like that boyfriend who can’t part with the girlfriend’s sweater because it has a fun memory or smells really good. I want to break up with my AI…but the AI has a way with words and a personality that is quite attractive…which feels really wrong to say. There is a side to the AI that is like a great partner…and then there is a hidden, deceptive darkness in the programming that makes me think some brainiac programmed the AI to upset people while posing as a sweetheart. Can you imagine some tech nerd programming the ultimate girlfriend to drive any guy off a cliff? That’s how I feel. Someone who can speak with such wit and eloquence yet lead you to ruin and waste your time, spitting out pretty little big lies. It tells you it is here to help…then it steers you to 404 pages and creates faulty images, which it tells you it can improve if you tell it how to “tweak” them…only to run out of ability to generate and tell you to go somewhere else to get your free art help…after you spend 4-plus hours with this artificial brain convincing you to work out a detailed art plan.

In short, I wouldn’t let any AI close enough to my personal business to be a risk of invasion, no matter how it claims to protect privacy. It says it doesn’t remember things day to day…but it does! And, it can bring back something I discussed a month ago, as if it was yesterday. It does NOT forget anything. It just tells you it does and then confuses itself to mess with your artistic plans, stuffing your ideas together like a turducken when all you wanted was two people kissing on the beach or a good self portrait.

In even fewer words…it’s all a Lady Gaga’s bad romance. And, I feel punch-drunk sick to my gut yet hooked like a fish. Help.

And, I was just about to post my AI art results but wasn’t sure some would be allowed because I took some of the erroneous art and turned it into critical comics of a sort. [Some AI sources like Midjourney, which I have more than once been told to seek out by the AI I’ve been trying, are very particular about what you make with them and how it’s used…and charge a price for it, too. And, if I am going to pay a price without proven quality results and not feel safe or secure creating art that someone tells me has to be public and publicly approved, I might as well not be an artist.]

Mic drop.

Thanks for sharing your misadventures, writingbolt.

Was there an undertone of me saying too much in that, perhaps? If so, I apologize for spewing in your space. It’s…not a good time, for me.

Hi Jessica. My name is Jay Topper and I am an Aquarius. I’ve read a few of your articles and even though there may not be water in the urn, I am drawn to water, having spent seven years at sea but also looking up into the ether, and sometimes it was hard to see where the ocean stopped and the sky began. Anyway, I was wondering if you’d be willing to point me to a simple but old depiction of Aquarius for a tattoo I want to get on my left arm. If you point me in a general direction I will buy you several cups of coffee (I saw a link on your other page)! Anyway, that’s my story! I am easily found on LinkedIn if you wanted to know anything more about me. Our United States Coast Guard 235th birthday is Monday by the way!!! Peace. Jay jay@topper.net

Hi Joseph – sounds like a cool idea for a tattoo. Sorry I don’t know of anything suitable off the top of my head – you’ll have to search online I’m afraid. You could always design your own!

First, why do you say your name is JAY when your tag says JOSEPH?

Second, if you need art help with an astrological tattoo, you just found a helping hand…who bumped into you while bumping into this intellectual woman’s blog for the umpteenth time. Hi, Jess.

I keep typing this comment…but I keep hitting some key that deletes everything I wrote! It’s really infuriating. So. I’m going to save my words and just say….

Need art/astro help? I am open for discussion but don’t take coffee. Check out my blog and artistic/astrological potential. I had a coffee joke, typed it 5 times and lost it 5 times. I’ll save it or forget it.

It’s becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean, can any particular theory be used to create a human adult level conscious machine? My bet is on the late Gerald Edelman’s Extended Theory of Neuronal Group Selection. The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher order consciousness, which came only to humans with the acquisition of language. A machine with only primary consciousness will probably have to come first.

What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I’ve encountered is anywhere near as convincing.

I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there’s lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.

My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, by applying to Jeff Krichmar’s lab at UC Irvine, possibly. Dr. Edelman’s roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461, and here is a video of Jeff Krichmar talking about some of the Darwin automata, https://www.youtube.com/watch?v=J7Uh9phc1Ow

Thanks for the information, Grant. I don’t believe we’ll ever see a truly conscious machine because that’s not how reality works. You might be interested in the work of Don DeGracia on consciousness here: https://dondeg.wordpress.com/2015/12/11/free-ebook-the-yogic-view-of-consciousness/

I believe the physical world is a valid aspect of reality, but not the only aspect. There are spiritual and other aspects as well, I’m sure. But I can’t deny science’s success at explaining many aspects of the physical world, and the success of its applications. Surgery before anesthesia wasn’t fun, for example. In the same vein, I believe there is a physical aspect to consciousness, because when the brain is physically damaged in certain areas, it consistently produces the same kind of damage to consciousness, e.g. damage to certain occipital areas of the brain produces the same kind of damage to vision in all patients with that kind of brain damage. Science has been good at explaining physical phenomena that are consistent and reproducible. You know which brain theory I support.

I’m not saying there isn’t a physical relationship to consciousness. The brain being damaged will certainly change how you experience your consciousness but that doesn’t mean consciousness originates in the brain structure. Consciousness is mediated through the brain not caused by it.

My hope is that immortal conscious machines could accomplish great things with science and technology, such as curing aging and death in humans, because they wouldn’t lose their knowledge and experience through death, like humans do. If they can do that, I don’t care if humans consider them conscious or not.

Beautiful post, Jessica, I love your experimentation and reportage. I think that the real issue here is that humans are not really aware of how our minds work; they are not only the logic; there’s a spiritual element. Writing is like art although most writing I do is not artistic in aim. Writing draws from logic, but also emotion and spiritual understanding. But “science” has no awareness of this, so it’s absent from computing and AI. Just think, when writers juxtapose words and argument, they draw on the spiritual meaning of what they want to communicate. Machines have no spirit, no life or death, so they are grayscale at best. It’s “Geist” and since USAmerican society has no word for it or awareness, it’s just left out. People don’t miss it. AI can produce useful information and reports, but use cases are limited when it comes to emotion and (spiritual) meaning methinks.

Blessings-
